See all posts

A deep dive into swerve

Going down a rabbit hole with FIRST robotics programming

December 31, 2024

This is not meant to be a tutorial — please read with some salt.

I wrote this quickly to share my learnings before year-end. While the content may be rough around the edges, I plan to refine it over time.

Our FRC team recently bought some swerve modules — MAXSwerve, to be exact. After putting them together, I decided to dive into the code behind them. Here's what I learned over a weekend (plus a few more nights). I've simplified what I originally shared with my team here.

What’s this FRC thing?

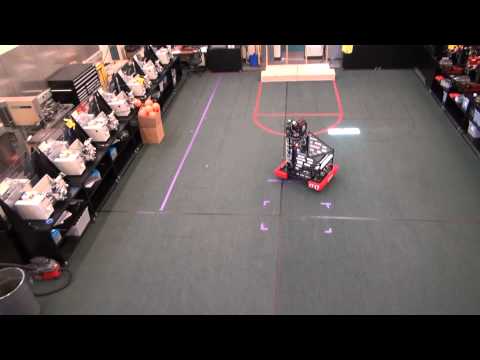

I should probably explain this before we start. FIRST Robotics Competition brings together teams of high school students to build large-scale robots and compete in challenges (and no, it's not like Battle Bots). Working alongside adult mentors, we design, build, program, and test a brand new robot each year to tackle FIRST's annual challenge. For 2025, we know the theme revolves around the ocean, but we won't get the full details until January 4, when the season kicks off. Here's what last year's game looked like:

Moving the robot

A robot's drivetrain uses spinning wheels powered by motors to move around. While there are several types of drivetrains, I'll give a quick overview of a few before diving into our main focus: swerve drive.

Tank drive

Tank drive is the most basic and most common drivetrain type. Like a tank, it uses two sets of wheels — left and right — that control movement and rotation. The robot moves forward when both sets spin forward, and rotates when the sets spin in opposite directions.

Tank drive is simple to build and program, but it has one key limitation: the robot can only move in the direction it's facing (forwards and backwards). This constraint becomes important when comparing it to other drivetrains.

Mecanum drive

Mecanum drive is uncommon in FRC. It uses special omnidirectional wheels that can move the robot forwards, backwards, sideways, and rotate. Each wheel applies force at a 45-degree angle, and when multiple wheels spin together, their force vectors partially cancel each other out.

While mecanum drive offers an affordable way to achieve omnidirectional movement, it has drawbacks. The canceling forces reduce power, and the rollers on the wheels provide less friction than standard wheels.

Swerve drive

Swerve drive is the most common way to achieve omnidirectional movement. By physically rotating each wheel (like a shopping cart's caster wheels), the robot can drive and rotate in any direction.

While swerve drive is both expensive and the most complex to program, it offers unmatched speed and maneuverability.

A swerve chassis consists of four swerve modules (one is shown above). Each module has two key functions: steering (changing the wheel's direction) and driving (spinning the wheel). Two motors handle these functions—one for steering and one for driving—and an encoder tracks the wheel's direction.

To the folks in FRC: Yes, differential swerve exists, but it is no longer used due to recent rule changes.

The math behind swerve

Team 2767 Stryke Force has an awesome slide deck on swerve drive. Most things in this section is based off of theirs. They have a ton more detail into this. Link

In FIRST robotics, the coordinate system follows a standard convention: counterclockwise rotation is positive, and the Z-axis points upward. Here's how the axes appear when viewed from above:

A swerve robot has three degrees of motion:

- Two translational: X and Y

- One rotational: Yaw (around the Z axis)

Since robots typically don’t fly, we ignore the Z axis. We use θ (theta) to represent angular position and ω (omega) to represent angular velocity.

We can translate each of these degrees of motion into specific movements for each swerve wheel:

These velocity vectors illustrate the robot's movement. The purple arrows show the robot's overall direction and speed, while the red arrows indicate how each wheel moves individually.

Just like regular vectors, we can combine these movement vectors. For example, if I want the robot to move forwards and to the right with equal speed:

This same principle applies to rotation. For example, if we want the robot to move forwards and right while rotating clockwise:

So what does all this mean in practice?

Suppose I want the robot to move in a specific way (including rotation). We'll call this the target robot velocity vector. This target can be broken down into simpler velocity vectors. By calculating the wheel velocity vectors for each wheel and combining them, we can determine the final target wheel velocity vector for each wheel.

Some more considerations

The length of each velocity vector indicates speed, with a length of 1 representing 100% (max speed). After calculating the wheel vectors, their lengths can exceed 1. In the example above, all speeds except the bottom right are greater than 1, even though each individual wheel velocity vector has a length of 1.

A simple solution is to divide all speeds by the highest speed to normalize them. Here's what that looks like with our example:

Wheel | Calculated Speed | Normalized Speed |

Top left | 2.414 | 1.0 |

Top right | 1.732 | 0.717 |

Bottom left | 1.732 | 0.717 |

Bottom right | 0.414 | 0.172 |

The angle of the wheels can be optimized. Rather than turning 180 degrees, simply reversing the wheel's spin direction achieves the same result. This means wheels never need to rotate more than 90 degrees.

In practice, the wheels take time to turn, causing a slight natural lag. To minimize this effect, the wheel speed can be multiplied by the cosine of the angle error. This cosine compensation technique effectively reduces directional skew during turns.

For example, when a wheel is perpendicular to its target direction: . The wheel stops spinning.

When the wheel points exactly where it should: . The speed remains unchanged.

Field oriented

Right now, our target robot velocity vector is robot-relative. This means the robot moves relative to its own orientation—when you command it to go left, it moves left from wherever it's pointing.

But what if we want the robot to move relative to the field instead? With field-oriented drive, "forward" always means away from the driver, no matter which way the robot faces. This lets the robot rotate while moving in any direction—super fun! Here's a cool video from team 1717 Uncut demonstrating their field-oriented swerve drive:

The theory behind field-oriented control is simpler than you might expect. A gyro added to the robot measures its angular offset. By offsetting all swerve modules in the opposite direction by the same amount, we effectively "cancel out" the robot's rotation.

However, there's a key detail: the rotational velocity vector should not be offset, since the robot's rotational velocity is always relative to itself and unaffected by field orientation.

When we convert robot velocity vectors into wheel velocity vectors, we simply need to rotate the X and Y directional vectors—that's the main principle!

As mentioned earlier, swerve modules slightly lag behind their desired positions. During simultaneous movement and rotation, this lag causes the robot to travel in a slightly slanted path. A simple fix is to add or subtract a percentage of the angular velocity, deliberately programming a slanted line that results in straight motion.

Tools that I’ll be using

Before diving into the code, let me introduce the key tools I'll be using. I'll explore most of these in more depth later. Note that I won't cover Driver Station (which connects to the robot and lets you control it) or VS Code and the WPILib API (used to interact with robot electronics).

AdvantageScope

AdvantageScope comes pre-installed with WPILib and provides real-time visualization of robot telemetry data. Its built-in tools offer far better insights than traditional console logs.

AdvantageKit

AdvantageKit is an optional logging framework that works seamlessly with AdvantageScope. It offers streamlined logging capabilities and enables data playback for simulation purposes.

PathPlanner & PathPlannerLib

PathPlanner is a graphical interface for creating autonomous driving paths. Its companion library, PathPlannerLib, handles all the necessary robot-side calculations.

maple-sim

maple-sim is an FRC physics engine that simulates robots, subsystems, and projectiles. Its standout feature is its ability to calculate collisions for both robots and game pieces, enabling highly accurate virtual testing.

PhotonVision & PhotonLib

PhotonVision is a computer vision system for FRC that runs on a co-processor with PhotonLib. PhotonLib can simulate camera views, letting you test vision systems virtually.

Putting it together in code

This section assumes prior FRC programming knowledge and may be challenging to follow without it. The explanations here are meant to be read alongside the completed code — they focus on the reasoning behind implementation choices rather than providing step-by-step instructions. Due to the complex nature of the content, a detailed tutorial format wasn't practical.

If you're new to FRC programming, I strongly recommend starting with the FRC 0 to Autonomous series.

All code is available in the various branches on GitHub: Link

This code uses 2024 versions of all tools and libraries, unless noted otherwise.

Moving one module

Let's start simple by controlling a single wheel. I'll make it follow the joystick position: pushing up points the wheel forward, down points it backward, and the further you push, the faster it spins. Code for this section

REV provides a handy

MAXSwerveModule class that handles all the low-level motor control code. I won't delve into its details, but it's thoroughly documented.The

MAXSwerveModule class lets us control the swerve module by providing it with a SwerveModuleState. This state represents the wheel velocity vector mentioned earlier.In our own

DriveTrainSubsystem class, I expose this functionality while reducing the speed by 80% for safer testing.public void setSwerveState(SwerveModuleState state) { SwerveModuleState desiredState = new SwerveModuleState(); desiredState.speedMetersPerSecond = state.speedMetersPerSecond * 0.2; desiredState.angle = desiredState.angle; m_swerveModule.setDesiredState(state); }

I've also added methods like

resetEncoders and getState to provide basic module control.Let's create a

SwerveDriveCmd to expose this subsystem method and make it runnable. To prevent unwanted movement, we'll add logic that keeps the wheel from resetting to its zero position when it's not actively spinning.@Override public void execute() { SwerveModuleState desiredState = new SwerveModuleState(velocityFunction.get(), rotationFunction.get()); // Only change angle if it is moving if (desiredState.speedMetersPerSecond == 0) { desiredState.angle = driveSubsystem.getState().angle; } driveSubsystem.setSwerveState(desiredState); }

This command can then be used in

RobotContainer and bound to a controller.driveSubsystem.setDefaultCommand( new SwerveDriveCmd( driveSubsystem, () -> Math.sqrt( Math.pow(MathUtil.applyDeadband(controller.getRawAxis(OIConstants.kDriverControllerXAxis), OIConstants.kDriveDeadband), 2) + Math.pow(MathUtil.applyDeadband(controller.getRawAxis(OIConstants.kDriverControllerYAxis), OIConstants.kDriveDeadband), 2) ), () -> new Rotation2d(Math.atan2( MathUtil.applyDeadband(controller.getRawAxis(OIConstants.kDriverControllerXAxis), OIConstants.kDriveDeadband), MathUtil.applyDeadband(controller.getRawAxis(OIConstants.kDriverControllerYAxis), OIConstants.kDriveDeadband) )) ) );

And… that’s it! It should work now provided you’ve set up your swerve module correctly!

Note that this code structure isn't ideal - the joystick input conversion logic (the sqrt, atan, pow calculations) should really be in the subsystem or command class. I took a shortcut here.

Two (or more) modules

We can actually get a robot working with just two swerve modules (as long as we add a third point of support like a caster wheel). We're using only two modules (front left & back right) to minimize potential damage - these modules aren't cheap! Code for this section

Building on our previous work, we're now exposing two modules through

DriveTrainSubsystem.We'll implement all the math logic in

SwerveDriveCmd. First, we create the "base vectors" that convert velocity vectors into wheel velocity vectors. Since there's no straightforward way to represent a 2D vector, we're using Translation2d - it serves the same purpose. // base vectors (front left, back right) Translation2d baseXVec[] = {new Translation2d(1, 0), new Translation2d(1, 0)}; Translation2d baseYVec[] = {new Translation2d(0, 1), new Translation2d(0, 1)}; Translation2d baseRotVec[] = {new Translation2d(-1, 1), new Translation2d(1, -1)};

We can then calculate the target wheel velocity vectors.

Translation2d frontLeftVec = baseXVec[0].times(xVelFunction.get()).plus(baseYVec[0].times(yVelFunction.get())).plus(baseRotVec[0].times(rotVelFunction.get())); Translation2d backRightVec = baseXVec[1].times(xVelFunction.get()).plus(baseYVec[1].times(yVelFunction.get())).plus(baseRotVec[1].times(rotVelFunction.get()));

We then calculate the magnitude and direction for our desired swerve module state.

SwerveModuleState desiredStateFrontLeft = new SwerveModuleState(frontLeftVec.getNorm(), frontLeftVec.getAngle()); SwerveModuleState desiredStateBackRight = new SwerveModuleState(backRightVec.getNorm(), backRightVec.getAngle());

Then, we can normalize the speeds to ensure everything is under 100%

Double maxVel = Math.max(desiredStateFrontLeft.speedMetersPerSecond, desiredStateBackRight.speedMetersPerSecond); if (maxVel > 1) { desiredStateFrontLeft.speedMetersPerSecond /= maxVel; desiredStateBackRight.speedMetersPerSecond /= maxVel; }

We've been using percentages for speed since they make the math simpler, but the actual speed needs to be in meters per second. Let's convert our percentage values to the proper units.

desiredStateFrontLeft.speedMetersPerSecond *= DriveConstants.kMaxSpeedMetersPerSecond; desiredStateBackRight.speedMetersPerSecond *= DriveConstants.kMaxSpeedMetersPerSecond;

When the robot stops moving and its speed drops to zero, the swerve module tries to reset to its default position of 0. To prevent this unwanted rotation, we keep the wheel at its last position when the speed is near zero by storing the previous angle in

prevAngle.if (desiredStateFrontLeft.speedMetersPerSecond <= 0.05) { desiredStateFrontLeft.speedMetersPerSecond = 0; desiredStateFrontLeft.angle = prevAngle[0]; } if (desiredStateBackRight.speedMetersPerSecond <= 0.05) { desiredStateBackRight.speedMetersPerSecond = 0; desiredStateBackRight.angle = prevAngle[1]; } prevAngle[0] = desiredStateFrontLeft.angle; prevAngle[1] = desiredStateBackRight.angle;

Yes, I forgot to add cosine compensation. It’ll be in the next part.

Now we need to feed the controller inputs into the command in

RobotContainer. Note that the x and y directions are flipped and negated due to different axis conventions between the controller and robot.driveSubsystem.setDefaultCommand( new SwerveDriveCmd( driveSubsystem, () -> MathUtil.applyDeadband(-controller.getRawAxis(OIConstants.kDriverControllerYAxis), OIConstants.kDriveDeadband), () -> MathUtil.applyDeadband(-controller.getRawAxis(OIConstants.kDriverControllerXAxis), OIConstants.kDriveDeadband), () -> MathUtil.applyDeadband(-controller.getRawAxis(OIConstants.kDriverControllerRotAxis), OIConstants.kDriveDeadband) ) );

That’s it!

Two (or more) modules — the IO Interface

IO Interface

Let's explore IO Interfaces, which use a significantly different code structure.

Let's look at how code traditionally runs on a robot:

Data flows into the "robot logic", gets processed, and outputs emerge at various points in the code. Though this linear structure seems straightforward, it complicates monitoring and logging the system's state. There's also a risk that sensor data may be unavailable when needed (for example, if vision processing hasn't completed), forcing you to manually track and store past data.

Here's how we can structure code using an IO interface:

"Does this really need to be this complicated?" Don't worry—it'll make more sense once we start coding.

Essentially, we abstract everything flowing into and out of the subsystem logic into one central place: the IO Class. This structure enforces accurate logging, since inputs remain unmodified until they're logged, and outputs are converted into a common format for logging before being translated into hardware-specific values.

Since it's an interface implementation, we can swap out the IO class while preserving everything else, and the code will continue working. This is a huge advantage—it lets us easily switch between code for real hardware and simulated hardware.

Clarification on inputs & outputs:

- Inputs: Everything that flows into the robot logic, including encoder values, limit switches, and vision data

- Outputs: Everything that the robot logic sends to the hardware, such as target positions and voltages

Since inputs remain unprocessed, their logging stays free from any potential code errors.

But why have a hardware class? Why not let the subsystem call methods directly on the IO class? While this is possible, the IO class should only handle hardware-specific code (like for Spark or Talon motor controllers). The subsystem should output data in an easily loggable format (such as a

Pose2d rather than raw encoder values). Putting calculations in the hardware class lets us reuse common operations. A subsystem might need multiple instances of the hardware class (swerve drive need multiple modules). This pattern isn't always necessary though—a simple device like a gyro might not need a hardware class since it has minimal methods beyond calibration.Back to the code

Let's define the IO interface for the swerve module,

ModuleIO. The interface defines inputs that other code can read through an updateInputs method, and setters for hardware control. We'll keep it hardware-agnostic by excluding any device-specific code. public interface ModuleIO { @AutoLog public static class ModuleIOInputs { public SwerveModulePosition position = new SwerveModulePosition(); public SwerveModuleState state = new SwerveModuleState(); } public default void updateInputs(ModuleIOInputs inputs) {} public default void setState(SwerveModuleState state) {} public default void resetEncoders() {} }

Note that I added the

@AutoLog annotation, which allows AdvantageKit to automatically log all values in the inputs.Next, let's implement the hardware-specific version of this interface: the IO class,

ModuleIOMAXSwerve. Since this class contains only MAXSwerve-specific code and is quite lengthy, I'll link to it rather than include it here.Note that parameters are allowed in the constructor. Also, the

setState method tells the module to move.We now create the hardware class,

Module, which contains most of the common code. The constructor takes an instance of ModuleIO, and the class's periodic function updates and logs its inputs. By using ModuleIO instead of ModuleIOMAXSwerve and leveraging interfaces, we can easily support different module implementations.public Module(ModuleIO io, int index) { this.io = io; this.index = index; } public SwerveModuleState runState(SwerveModuleState state) { // the code here is similar to what was done before in MAXSwerveModule.java's setDesiredState io.setState(optimizedDesiredState); return optimizedDesiredState; } public void periodic() { io.updateInputs(inputs); Logger.processInputs("Drive/Module" + Integer.toString(index), inputs); } // more code ...

The logger automatically logs inputs under

Drive/Module0, Drive/Module1, etc. Since this isn't a subsystem, the periodic function isn't an override. Instead, it's called by the subsystem's periodic method.

I'll skip the details of the gyro implementation. Since the gyro is straightforward, I omitted its hardware class. You can find the code in the repository.

The

Drivetrain subsystem will take in ModuleIO and create the hardware classes internally. Since the gyro has no hardware class, we simply store its IO.public Drivetrain(ModuleIO flModuleIO, ModuleIO brModuleIO, GyroIO gyroIO) { modules[0] = new Module(flModuleIO, 0); modules[1] = new Module(brModuleIO, 3); this.gyroIO = gyroIO; }

Since the gyro lacks a hardware class, we must handle its input updates and logging within the subsystem

@Override public void periodic() { gyroIO.updateInputs(gyroInputs); Logger.processInputs("Drive/Gyro", gyroInputs); // more code...

To implement field-oriented control, we subtract the gyro's measured angle from the translational vectors in the Module class to negate the robot's rotation. After this adjustment, we add the rotation vector back to maintain the desired turning motion.

Translation2d frontLeftVec = baseXVec[0].times(speeds.vxMetersPerSecond) .plus(baseYVec[0].times(speeds.vyMetersPerSecond)).rotateBy(gyroInputs.angle.times(-1)); frontLeftVec = frontLeftVec.plus(baseRotVec[0].times(speeds.omegaRadiansPerSecond)); Translation2d backRightVec = baseXVec[1].times(speeds.vxMetersPerSecond) .plus(baseYVec[1].times(speeds.vyMetersPerSecond)).rotateBy(gyroInputs.angle.times(-1)); backRightVec = backRightVec.plus(baseRotVec[1].times(speeds.omegaRadiansPerSecond));

The computation follows the same pattern as before, but now we invoke the

runState method from the Module class.for (int i = 0; i < desiredStates.length; i++) { modules[i].runState(state); }

We can record these states for debugging. Since I'm only using two modules, I've added two empty states to fill out the array.

Logger.recordOutput("SwerveStates/Setpoints", new SwerveModuleState[] { desiredStates[0], new SwerveModuleState(), new SwerveModuleState(), desiredStates[1] });

Now let's update our

SwerveDriveCmd to match this new structure. The command won't perform any calculations, but simply pass values through.public SwerveDriveCmd(Drivetrain dSub, Supplier<Double> xSup, Supplier<Double> ySup, Supplier<Double> wSup) { driveSub = dSub; xSupplier = xSup; ySupplier = ySup; omegaSupplier = wSup; addRequirements(driveSub); } @Override public void execute() { driveSub.runVelocity(new ChassisSpeeds(xSupplier.get(), ySupplier.get(), omegaSupplier.get())); }

RobotContainer needs updating as well. We'll provide the IO classes as inputs to the subsystem constructor.driveSub = new Drivetrain( new ModuleIOMAXSwerve(0), new ModuleIOMAXSwerve(3), new GyroIOADXRS450()); driveSub.setDefaultCommand( new SwerveDriveCmd( driveSub, () -> MathUtil.applyDeadband(-controller.getRawAxis(OIConstants.kDriverControllerYAxis), OIConstants.kDriveDeadband), () -> MathUtil.applyDeadband(-controller.getRawAxis(OIConstants.kDriverControllerXAxis), OIConstants.kDriveDeadband), () -> MathUtil.applyDeadband(-controller.getRawAxis(OIConstants.kDriverControllerRotAxis), OIConstants.kDriveDeadband)));

That wraps this section! You can view all the logged values in AdvantageScope's swerve tab by dragging them from the left panel. I've included the controller view as well. To connect to the robot, just check the File tab.

Simulation & path following

Here comes the exciting part — simulating all of this so we don’t need a real robot! Code for this section

Simplifying our code

Instead of performing the swerve drive calculations manually, we can use WPILib's built-in kinematics utilities to handle the math for us. I'll also upgrade from two modules to four, since that's what we'll use in competition.

The modules themselves remain unchanged, but we need to modify how we generate each swerve module's

SwerveModuleState in the Drivetrain subsystem.First, we need to define our drivetrain's kinematics in our constants file.

public static final SwerveDriveKinematics kDriveKinematics = new SwerveDriveKinematics( new Translation2d(kWheelBase / 2, kTrackWidth / 2), new Translation2d(kWheelBase / 2, -kTrackWidth / 2), new Translation2d(-kWheelBase / 2, kTrackWidth / 2), new Translation2d(-kWheelBase / 2, -kTrackWidth / 2));

In our

runVelocity function, we can now simplify all our previous vector math into a single line of code.SwerveModuleState[] states = DriveConstants.kDriveKinematics.toSwerveModuleStates(fieldOrientedSpeeds);

We still need to normalize these values to prevent them from exceeding the maximum speed. Note that we're now working with actual speeds in meters per second rather than percentages.

SwerveDriveKinematics.desaturateWheelSpeeds(states, DriveConstants.kMaxSpeedMetersPerSecond);

I've now implemented cosine compensation in our

Module class, which I had missed in the previous iteration.correctedDesiredState.speedMetersPerSecond *= correctedDesiredState.angle.minus(getAngle()).getCos();

Field-oriented driving is straightforward to implement. I've added a Boolean toggle to switch between field-oriented and robot-oriented driving.

Another important consideration: When modules rotate to new positions, they create a "skew" effect in field-oriented driving. To counteract this, we apply an opposite skew to the path. The faster the robot rotates, the more skew compensation is needed.

Rotation2d compensatedAngle = gyroInputs.angle.plus(new Rotation2d(gyroInputs.rate*0.05)); ChassisSpeeds fieldOrientedSpeeds = fieldRelative ? ChassisSpeeds.fromFieldRelativeSpeeds( speeds.vxMetersPerSecond, speeds.vyMetersPerSecond, speeds.omegaRadiansPerSecond, compensatedAngle ) : speeds;

For the command class, we now accept a

ChassisSpeed parameter instead of individual velocities. We'll handle the conversion in SwerveDriveCmd.@Override public void execute() { Double xVel = xSupplier.get()*DriveConstants.kMaxSpeedMetersPerSecond; Double yVel = ySupplier.get()*DriveConstants.kMaxSpeedMetersPerSecond; Double omega = omegaSupplier.get()*DriveConstants.kMaxAngularSpeed; Logger.recordOutput("Drive/Inputs/xVel", xVel); Logger.recordOutput("Drive/Inputs/yVel", yVel); Logger.recordOutput("Drive/Inputs/omega", omega); driveSub.runVelocity(new ChassisSpeeds(xVel, yVel, omega)); }

And everything should work the same as before!

Simulating components

The IO Interface structure makes it super easy for us swap parts for simulated stuff. Let’s start with the swerve module.

We'll create a

ModuleIOSim class that implements ModuleIO. This will closely mirror ModuleIOMAXSwerve.For motor simulation, WPILib provides a

DCMotorSim class. While the simulation isn't perfect and uses somewhat arbitrary constants, it gets the job done. You could calculate more precise values, but I didn't have a physical robot available for measurements.private final DCMotorSim driveSim = new DCMotorSim(DCMotor.getNEO(1), ModuleConstants.kDrivingMotorReduction, 0.025); private final DCMotorSim turnSim = new DCMotorSim(DCMotor.getNeo550(1), ModuleConstants.kTurningMotorReduction, 0.004);

In a real robot, the Spark MAX motor controller handles PID control automatically, but we'll need to implement this ourselves for the simulation.

PID: We can't just tell a motor to go to a specific position—we need to control its direction and voltage based on where it is and where it needs to go. Simply commanding a motor to turn until it reaches a target will cause it to overshoot. PID control allows motors to move smoothly to their target positions. Since every motor behaves differently, PID settings must be tuned for each one.

drivePID = new PIDController(ModuleConstants.kDrivingPSim.get(), ModuleConstants.kDrivingISim.get(), ModuleConstants.kDrivingDSim.get()); driveFeedforward = new SimpleMotorFeedforward(0.0, 0.1); turnController = new PIDController(ModuleConstants.kTurningPSim.get(), ModuleConstants.kTurningISim.get(), ModuleConstants.kTurningDSim.get()); turnController.enableContinuousInput(-Math.PI, Math.PI);

Feedforward provides an initial "push" to help get the system moving. The

continuousInput tells the controller that values wrap around—meaning represents the same position as 0.The simulation logic runs in the

updateInputs method since it executes periodically. We must still update the input values during each cycle.@Override public void updateInputs(ModuleIOInputs inputs) { drivePID.setP(ModuleConstants.kDrivingPSim.get()); drivePID.setI(ModuleConstants.kDrivingISim.get()); drivePID.setD(ModuleConstants.kDrivingDSim.get()); turnController.setP(ModuleConstants.kTurningPSim.get()); turnController.setI(ModuleConstants.kTurningISim.get()); turnController.setD(ModuleConstants.kTurningDSim.get()); driveSim.update(LOOP_PERIOD_SECS); turnSim.update(LOOP_PERIOD_SECS); inputs.position = new SwerveModulePosition( driveSim.getAngularPositionRad() * ModuleConstants.kWheelDiameterMeters / 2, new Rotation2d(turnSim.getAngularPositionRad()) ); inputs.state = new SwerveModuleState( driveSim.getAngularVelocityRadPerSec() * ModuleConstants.kWheelDiameterMeters / 2, new Rotation2d(turnSim.getAngularPositionRad()) ); }

Note that I'm using

getAngularPositionRad and getAngularVelocityRadPerSec to obtain the simulated values. The simulation includes some built-in delay and other realistic effects.Since the simulated motors accept voltage inputs, we'll use the PID controllers' output along with conversion factors to account for the gearing ratios and wheel diameter.

@Override public void setState(SwerveModuleState state) { driveSim.setInputVoltage(driveFeedforward.calculate(state.speedMetersPerSecond / (ModuleConstants.kWheelDiameterMeters / 2)) + drivePID.calculate(driveSim.getAngularVelocityRadPerSec(), state.speedMetersPerSecond / (ModuleConstants.kWheelDiameterMeters / 2))); turnSim.setInputVoltage(turnController.calculate(turnSim.getAngularPositionRad(), state.angle.getRadians())); }

We need to log the measured states from each module. These values should show a slight delay compared to the setpoints, since motors take time to reach their target positions. While this is technically a getter method, the

@AutoLogOutput annotation will handle automatically logging the returned values.@AutoLogOutput(key = "SwerveStates/Measured") public SwerveModuleState[] getModuleStates() { return new SwerveModuleState[] { modules[0].getState(), modules[1].getState(), modules[2].getState(), modules[3].getState() }; }

Let's simulate the gyro next. The

GyroIOSim class will implement the GyroIO interface, similar to how GyroIOADRS450 works.public class GyroIOSim implements GyroIO { private static final double LOOP_PERIOD_SECS = 0.02; private Double angle = 0.0; private Double rate = 0.0; public GyroIOSim() { } @Override public void updateInputs(GyroIOInputs inputs) { angle += rate * LOOP_PERIOD_SECS; inputs.connected = true; inputs.angle = new Rotation2d(angle); inputs.rate = rate; } @Override public void calibrate() { rate = 0.0; angle = 0.0; } public void updateAngularVelocity(double angularVelocity) { rate = angularVelocity; } public void updateAngle(double angle) { this.angle = angle; } }

Gyros measure angular velocity, so let's implement that in our simulation. Since we don't have actual velocity measurements to work from, I'll create a method that allows us to set this value manually.

private Double rate = 0.0; public void updateAngularVelocity(double angularVelocity) { rate = angularVelocity; }

We know

updateInputs runs periodically, so we can incrementally update our angle position. By default, the robot code loops every 0.02 seconds.private static final double LOOP_PERIOD_SECS = 0.02; private Double angle = 0.0; @Override public void updateInputs(GyroIOInputs inputs) { angle += rate * LOOP_PERIOD_SECS; inputs.connected = true; inputs.angle = new Rotation2d(angle); inputs.rate = rate; }

Next are methods to calibrate and directly set the gyro's angle.

@Override public void calibrate() { rate = 0.0; angle = 0.0; } public void updateAngle(double angle) { this.angle = angle; }

That's it for

GyroIOSim, but we still need to provide it with angular velocity data. Without actual sensor readings, we'll need to get creative. We can use kinematics to estimate the chassis's velocity vectors and extract the rotational component. This isn't a perfect solution since it assumes the robot's estimated position perfectly matches reality—which never happens in practice.Back in the

Drivetrain subsystem, let's create a helper function to calculate the ChassisSpeeds. Since the module states are robot-relative, this calculation will give us robot-relative speeds.public ChassisSpeeds getRelativeChassisSpeeds() { return DriveConstants.kDriveKinematics.toChassisSpeeds( modules[0].getState(), modules[1].getState(), modules[2].getState(), modules[3].getState() ); }

We can then use this calculated value to update our simulated gyro

ChassisSpeeds measuredChassisSpeeds = getRelativeChassisSpeeds(); // Simulation only if (gyroIO instanceof GyroIOSim) { ((GyroIOSim) gyroIO).updateAngularVelocity(measuredChassisSpeeds.omegaRadiansPerSecond); }

Since the IO Interface supports multiple gyro implementations, we need to verify that we're working with the simulated version.

Using simulated components

Thanks to the IO Interface, implementation is straightforward. A simple if statement in

RobotContainer lets us switch between simulated and real components.if (RobotBase.isReal()) { gyroIO = new GyroIOADXRS450(); driveSub = new Drivetrain( new ModuleIOMAXSwerve(0), new ModuleIOMAXSwerve(1), new ModuleIOMAXSwerve(2), new ModuleIOMAXSwerve(3), gyroIO); } else { gyroIO = new GyroIOSim(); driveSub = new Drivetrain( new ModuleIOSim(), new ModuleIOSim(), new ModuleIOSim(), new ModuleIOSim(), gyroIO); }

When running the simulation in AdvantageScope, you can see the lag between the target states and the actual measured states.

Odometry

Odometry is a method of pose estimation that tracks where the robot is based on sensor data. By measuring how far and in which direction the wheels have moved, we can calculate the robot's pose relative to its starting point. A pose describes both the robot's position (translation) and orientation (rotation) in space. We can use odometry as a starting point to visualize the robot's pose in an idealized environment.

This isn’t perfect, wheel slippage causes the position estimate to become increasingly inaccurate over time.

WPILib makes odometry really easy. Let's modify some code in our

Drivetrain subsystem.SwerveDriveOdometry odometry = new SwerveDriveOdometry( DriveConstants.kDriveKinematics, new Rotation2d(), new SwerveModulePosition[] { new SwerveModulePosition(), new SwerveModulePosition(), new SwerveModulePosition(), new SwerveModulePosition() } );

SwerveModulePosition is similar to SwerveModuleState, but instead of tracking velocity, it measures the distance the wheel has traveled. Odometry uses these wheel displacement measurements to estimate the robot's position.To track the robot's position, we must continuously update the odometry calculations using the latest module positions and gyro angle readings

@Override public void periodic() { // other code ... Pose2d pose = odometry.update( gyroInputs.angle, getModulePositions() ); // other code... } public SwerveModulePosition[] getModulePositions() { return new SwerveModulePosition[] { modules[0].getPosition(), modules[1].getPosition(), modules[2].getPosition(), modules[3].getPosition() }; }

We can use AdvantageScope's auto-logging feature to track the robot's estimated pose

@AutoLogOutput(key = "Drive/Odometry") public Pose2d getPose() { return odometry.getPoseMeters(); }

AdvantageScope lets you visualize this pose in either 2D or 3D field view. While the 3D view looks more impressive, it does require more computational power.

Notice how the robot passes through objects. This happens because the robot only tracks its estimated coordinates without any awareness of the physical field or obstacles around it. At this stage, there's no physics simulation involved.

Path following

Being able to track the robot's position in space unlocks powerful capabilities. We can command the robot to move to specific coordinates or follow a sequence of waypoints—this is the essence of path/trajectory following.

In practice, odometry is rarely perfect and tends to drift over time. This can be corrected using vision systems (which I'll cover later).

For path following, I'll be using PathPlanner, a tool that offers powerful features for creating and following robot paths.

I'll skip explaining the GUI since it's quite straightforward. Check out their docs for a walkthrough.

First, we need to set up an

AutoBuilder in the constructor of the Drivetrain subsystem. This will handle all the complex calculations for us.AutoBuilder.configureHolonomic( this::getPose, this::resetOdometry, this::getRelativeChassisSpeeds, this::runVelocityRobotRelative, new HolonomicPathFollowerConfig( new PIDConstants(AutoConstants.kPXController, AutoConstants.kIXController, AutoConstants.kDXController), new PIDConstants(AutoConstants.kPThetaController, AutoConstants.kIThetaController, AutoConstants.kDThetaController), AutoConstants.kMaxModuleSpeedMetersPerSecond, DriveConstants.kDriveRadius, new ReplanningConfig() ), () -> { Optional<Alliance> alliance = DriverStation.getAlliance(); return alliance.isPresent() ? alliance.get() == DriverStation.Alliance.Red : false; }, this );

Holonomic refers to omnidirectional drives like swerve. PathPlanner needs to know which alliance side the robot is on since some fields are asymmetrical. Regardless of alliance color, the field's coordinate origin remains in the same position relative to each alliance's driver station.

Let's create a command that drives the robot autonomously to the speaker. We'll call it

GoToSpeakerCmd.public class GoToSpeakerCCmd extends SequentialCommandGroup { public GoToSpeakerCCmd() { PathPlannerPath intoSpeakerCPath = PathPlannerPath.fromPathFile("Into Speaker Center"); PathConstraints pathfindingConstraints = new PathConstraints( AutoConstants.kMaxSpeedMetersPerSecond, AutoConstants.kMaxAccelerationMetersPerSecondSquared, AutoConstants.kMaxAngularSpeedRadiansPerSecond, AutoConstants.kMaxAngularSpeedRadiansPerSecondSquared ); addCommands( AutoBuilder.pathfindThenFollowPath(intoSpeakerCPath, pathfindingConstraints) ); } }

We configure PathPlanner with our robot's physical constraints like maximum speed and acceleration. The software first pathfinds to reach the starting point of the "Into Speaker Center" path, avoiding obstacles along the way. Then it follows that predefined path precisely. Using a fixed final approach path makes the robot's movement more consistent and accurate.

We can bind this command to a button in

RobotContainer to make it run while it's held. This while-held approach is safer than a simple start/stop button.new JoystickButton(controller, 3).whileTrue( new GoToSpeakerCCmd() );

I created similar paths for the amp and human player station. Here's how they look together in AdvantageScope.

Driving in the autonomous period works similarly. Simply create an auto chooser in

RobotContainer and return PathPlanner's generated autonomous command.public RobotContainer() { // more code... autoChooser = new LoggedDashboardChooser<Command>("Auto Routine", AutoBuilder.buildAutoChooser()); SmartDashboard.putData("Auto Routine", autoChooser.getSendableChooser()); // more code... } public Command getAutonomousCommand() { return autoChooser.get(); }

Here's a demonstration of an autonomous routine that still requires tuning

Physics & vision

From this point forward, we'll use the 2025 versions of all libraries. maple-sim has significantly enhanced its swerve simulation capabilities in the 2025 release.

I'll skip explaining the code migration process to the 2025 version, as each package's documentation provides comprehensive upgrade guides.

Physics with maple-sim

So far, our simulation hasn't included any actual physics, which limits its accuracy. maple-sim fills this gap by approximating real-world physics for robot subsystems. It can simulate field and game piece collisions, wheel skidding, gyro drift, and more. While maple-sim doesn't have its own interface, it integrates with AdvantageScope for visualization.

To get started, let's create a simulated field. We'll use maple-sim in Robot to set up our virtual arena. We'll need to track game piece positions so they can be displayed in AdvantageScope

@Override public void simulationPeriodic() { SimulatedArena.getInstance().simulationPeriodic(); List<Pose3d> physNotePositionsList = SimulatedArena.getInstance().getGamePiecesByType("Note"); Pose3d[] physNotePositions = physNotePositionsList.toArray(new Pose3d[physNotePositionsList.size()]); Logger.recordOutput("FieldSimulation/NotePositions", physNotePositions); }

Let's update our

ModuleIOSim to integrate with maple-sim. We'll use maple-sim's SwerveModuleSimulation and SimulatedMotorController classes. The changes are minimal, mainly involving the new unit system introduced in 2025. The only structural change is that the constructor now requires a SwerveModuleSimulation parameter.The

GyroIOSim implementation will be different from before. By using maple-sim's GyroSimulation, we can simulate realistic gyro drift. This new approach is more straightforward than our previous implementation. Like the module simulation, the constructor requires a GyroSimulation parameter.public class GyroIOSim implements GyroIO { private final GyroSimulation gyroSimulation; public GyroIOSim(GyroSimulation gyroSim) { this.gyroSimulation = gyroSim; } @Override public void updateInputs(GyroIOInputs inputs) { inputs.connected = true; inputs.angle = gyroSimulation.getGyroReading(); inputs.rate = gyroSimulation.getMeasuredAngularVelocity().in(Units.RadiansPerSecond); } @Override public void calibrate() { gyroSimulation.setRotation(new Rotation2d(0)); } }

In our Drivetrain subsystem, we create an overloaded constructor that accepts an optional

SwerveDriveSimulation parameter. For safety, we add a check to prevent the simulation from running on a physical robot since it would unnecessarily consume resources.public Drivetrain(ModuleIO flModuleIO, ModuleIO frModuleIO, ModuleIO blModuleIO, ModuleIO brModuleIO, GyroIO gyroIO, ATVisionIO visionIO, SwerveDriveSimulation DriveSim) { this(flModuleIO, frModuleIO, blModuleIO, brModuleIO, gyroIO, visionIO); if (Robot.isReal()) throw new RuntimeException("Do not run simulation on the real robot!"); this.driveSimulation = DriveSim; }

We use this to log the actual robot position so we can view it in AdvantageScope. This value differs from odometry (where the robot thinks it is). The real position is never revealed to the robot's logic.

if (Robot.isSimulation()) { Logger.recordOutput("FieldSimulation/RobotPosition", driveSimulation.getSimulatedDriveTrainPose()); }

Back in

RobotContainer, we need to set up the drivetrain simulation and add it to our virtual field before passing it to the Drivetrain subsystem. This configuration requires several physical constants to be defined.DriveTrainSimulationConfig driveTrainSimulationConfig = DriveTrainSimulationConfig.Default() .withGyro(COTS.ofGenericGyro()) .withSwerveModule(COTS.ofMAXSwerve( DCMotor.getNEO(1), DCMotor.getNeo550(1), COTS.WHEELS.COLSONS.cof, 2)) .withTrackLengthTrackWidth( Units.Meters.of(DriveConstants.kWheelBase), Units.Meters.of(DriveConstants.kTrackWidth) ) .withBumperSize(Units.Meters.of(0.75), Units.Meters.of(0.75)); SwerveDriveSimulation swerveDriveSimulation = new SwerveDriveSimulation( driveTrainSimulationConfig, new Pose2d(2, 2, new Rotation2d(0)) ); gyroIO = new GyroIOSim(swerveDriveSimulation.getGyroSimulation()); driveSub = new Drivetrain( new ModuleIOSim(swerveDriveSimulation.getModules()[0]), new ModuleIOSim(swerveDriveSimulation.getModules()[1]), new ModuleIOSim(swerveDriveSimulation.getModules()[2]), new ModuleIOSim(swerveDriveSimulation.getModules()[3]), gyroIO, swerveDriveSimulation ); SimulatedArena.getInstance().addDriveTrainSimulation(swerveDriveSimulation);

The physics simulation, including collisions, is now functional! The odometry data (in green) becomes increasingly inaccurate over time and loses precision during collisions. This happens because the robot's position estimates—based on gyro angles and wheel movements—lack awareness of the actual field environment and have inherent limitations in accuracy.

Vision with PhotonVision

So far, all our sensor data has been relative to the starting point. As the robot moves, errors in these relative measurements add up, making its position estimate increasingly inaccurate. To fix these errors, we need to know the robot's absolute position. This is where vision comes in - the robot can see AprilTags and calculate its position relative to them. Since we know the absolute positions of these tags on the field, we can determine the robot's absolute position.

Why don't we just use vision and forget about encoders entirely?

Even with multiple cameras on your robot, there will be times when no AprilTags are visible. Regular odometry helps estimate the robot's position between AprilTag sightings. In practice, recognizing AprilTags is challenging, particularly when the robot moves at high speeds.

Like our other subsystems, we'll implement vision using the IO structure. First, let's create an IO interface,

ATVisionIO.public interface ATVisionIO { @AutoLog public static class ATVisionIOInputs { public boolean[] connected; public double latencySeconds; public int targetsCount; public int[] targetIDs; public Transform3d[] cameraToTargets; public Pose3d[] robotPoses; public double[] timestamp; } public default void updateInputs(ATVisionIOInputs inputs) { } public default void setReferencePose(Pose2d prevEstimatedPose) { } }

Next, we'll create a hardware class to handle the implementation. It processes the

robotPoses from the inputs by calculating the average of all valid poses along with their average timestamp.We can then implement the simulated vision IO class. We use PhotonLib's

PhotonCameraSim to simulate a virtual camera view. The implementation is complex due to the nested data structures.In

updateInputs, we detect AprilTags and calculate an estimated pose based on their positions. We then store this pose and related metadata in inputs.The vision simulation needs to know the robot's true position to simulate camera views. We can't use odometry data since that's what we're trying to correct with vision. This is where maple-sim helps by providing the robot's "real" position. We only use this real position to generate simulated camera views—not to estimate the robot's pose, as that would defeat the purpose. On a physical robot, we won't need maple-sim's position data since we'll have actual camera feeds from the hardware.

public ATVisionIOSim( List<Pair<String, SimCameraProperties>> cameraProperties, List<Transform3d> robotToCamera, AprilTagFieldLayout aprilTagFieldLayout, Supplier<Pose2d> trueRobotPoseSupplier) { // more code... trueRobotPoseSup = trueRobotPoseSupplier; } @Override public void updateInputs(ATVisionIOInputs inputs) { visionSystemSim.update(trueRobotPoseSup.get()); // more code...

PhotonVision's vision simulation is too accurate and doesn't account for visual obstructions. In real life, cameras have noise and distortion. While these factors can be corrected for, the results are not perfect.

Finally, we need to incorporate our vision estimates into the robot's positioning system. Instead of using plain odometry, we'll use

SwerveDrivePoseEstimator. This is a drop-in replacement for odometry that adds vision support. To implement this, we need to update the constructor in our Drivetrain subsystem by adding an ATVisionIO parameter and creating an ATVision hardware class, similar to how we set up the swerve modules.public Drivetrain(ModuleIO flModuleIO, ModuleIO frModuleIO, ModuleIO blModuleIO, ModuleIO brModuleIO, GyroIO gyroIO, ATVisionIO visionIO) { modules[0] = new Module(flModuleIO, 0); modules[1] = new Module(frModuleIO, 1); modules[2] = new Module(blModuleIO, 2); modules[3] = new Module(brModuleIO, 3); this.gyroIO = gyroIO; this.vision = new ATVision(visionIO); // more code...

In

periodic, we can call addVisionMeasurement. The vision’s estimated 3D pose is converted into 2D, ignoring the Z axis. We’re assuming robots can’t fly. if (visionPose.isPresent()) { Pose2d visionPose2d = new Pose2d( new Translation2d(visionPose.get().getFirst().getTranslation().getX(), visionPose.get().getFirst().getTranslation().getY()), new Rotation2d(visionPose.get().getFirst().getRotation().getZ()) ); poseEstimator.addVisionMeasurement(visionPose2d, visionPose.get().getSecond()); }

Returning to

RobotContainer, we need to configure and pass our new VisionIO instance into the Drivetrain subsystem.SimCameraProperties cam1Properties = new SimCameraProperties(); cam1Properties.setCalibration(640, 480, Rotation2d.fromDegrees(100)); cam1Properties.setFPS(20); visionIO = new ATVisionIOSim( List.of( Pair.of("camera1", cam1Properties) ), List.of( new Transform3d(new Translation3d(0.1, 0, 0.5), new Rotation3d(0, Math.toRadians(-15), 0)) ), AprilTagFieldLayout.loadField(AprilTagFields.k2024Crescendo), swerveDriveSimulation::getSimulatedDriveTrainPose ); driveSub = new Drivetrain( new ModuleIOSim(swerveDriveSimulation.getModules()[0]), new ModuleIOSim(swerveDriveSimulation.getModules()[1]), new ModuleIOSim(swerveDriveSimulation.getModules()[2]), new ModuleIOSim(swerveDriveSimulation.getModules()[3]), gyroIO, visionIO, swerveDriveSimulation );

Now, let's look at a demo in AdvantageScope!

- The robot you see represents the "real" position calculated by maple-sim

- The green ghost shows where the robot estimates its position using all available data

- The blue ghost indicates the most recent pose estimation from vision

- The yellow line displays both the path-following trajectory and the yellow ghost shows where the robot should be along the trajectory.

As the robot drives, you can observe its pose estimation drifting. The estimation becomes particularly inaccurate during collisions. However, whenever the robot sees a tag, the green ghost instantly corrects to the true position.

That concludes this deep dive! While it's a lengthy exploration, I hope I've made these complex concepts digestible.

You can always reach out to me with your thoughts or questions!